Introduction

In Python, we have discussed many concepts and conversions. But sometimes we need to flatten nested data stored in an RDD. In this tutorial, we will discuss the flatMap() function of the PySpark module. It applies a function to every element of an RDD and flattens the results into a single new RDD.

What is an RDD?

RDD stands for Resilient Distributed Dataset. It is the fundamental data structure of Spark: an immutable collection of elements that is divided into logical partitions. These partitions can be computed on different nodes of the cluster, so they are processed in parallel.
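To make the idea of partitions concrete, here is a plain-Python analogue that runs without Spark. The helper `split_into_partitions` and the use of a thread pool are assumptions made for illustration only. Spark's real partitioning and scheduling are more involved, and they run partitions on executor processes across nodes, not on threads in one program.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, num_partitions):
    """Hypothetical helper: cut a list into contiguous, roughly equal slices."""
    n = len(data)
    return [data[i * n // num_partitions:(i + 1) * n // num_partitions]
            for i in range(num_partitions)]


def process_partition(partition):
    """Work applied independently to one partition (here: string lengths)."""
    return [len(s) for s in partition]


input_data = ["Python Pool", "Latracal Solutions",
              "Python pool is best", "Basic command in python"]

partitions = split_into_partitions(input_data, 2)

# Each partition is handled by its own worker, loosely mirroring how Spark
# hands partitions to executors that work in parallel.
with ThreadPoolExecutor(max_workers=2) as pool:
    per_partition = list(pool.map(process_partition, partitions))

print(partitions)
print(per_partition)
```

Each partition is processed without looking at the others. That independence is what lets Spark spread the work across a cluster.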

Example of an RDD

In this example, we will create an RDD and print its contents. First, we take the input data. Then, we use the sparkContext.parallelize() method to create a parallelized collection. This lets us distribute the data across multiple nodes instead of depending on a single node to process it. Finally, we print the elements of the RDD with a for loop. Let us look at the example to understand the concept in detail.

NOTE: You need to install PySpark (for example, with pip install pyspark) before you can run these programs.

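```python
from pyspark.sql import SparkSession

# Create (or reuse) a SparkSession; its sparkContext is the entry point for RDDs
spark = SparkSession.builder.appName('SparkByExamples.com').getOrCreate()

input_data = ["Python Pool",
              "Latracal Solutions",
              "Python pool is best",
              "Basic command in python"]

# Distribute the list across the cluster as an RDD
rdd = spark.sparkContext.parallelize(input_data)

# collect() brings all elements back to the driver as a Python list
for ele in rdd.collect():
    print(ele)
```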

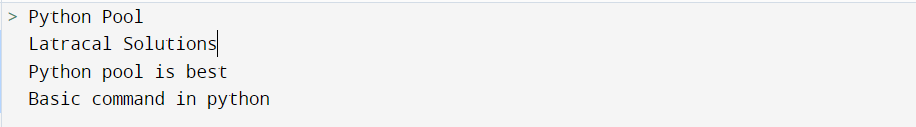

Output:

Explanation:

- First, we store the four input strings in input_data.

- Then, we call sparkContext.parallelize() to turn the list into an RDD.

- Finally, we call collect() to gather the elements back to the driver and print each one with a for loop.

- The output shows the four strings unchanged, one per line.
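collect() gathers every partition back into one list on the driver program, keeping the elements in order. It is fine for small examples like this one, but it can exhaust driver memory on a large RDD. The sketch below is a plain-Python stand-in, not PySpark's implementation. The two-slice partitioning is a hypothetical choice made for illustration.

```python
from itertools import chain


def collect(partitions):
    """Plain-Python stand-in for rdd.collect(): concatenate every partition,
    in partition order, into a single list on the "driver"."""
    return list(chain.from_iterable(partitions))


# Hypothetical partitioning of input_data into two slices
partitions = [["Python Pool", "Latracal Solutions"],
              ["Python pool is best", "Basic command in python"]]

for ele in collect(partitions):
    print(ele)
```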

What is the flatMap() function?

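flatMap() is a transformation in the PySpark module. It applies a function to every element of an RDD, where the function returns an iterable, and it flattens all of the returned items into a new RDD. Note that flatMap() is an RDD method. PySpark DataFrames do not have one, so to flatten array or map columns of a DataFrame you would typically use pyspark.sql.functions.explode(), or call flatMap() on df.rdd.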
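The flattening rule can be sketched in plain Python. The function `flat_map` below is a hypothetical local helper, not part of PySpark, but it follows the same contract: `f` returns an iterable for each element, and the items of those iterables are concatenated one level deep.

```python
from itertools import chain


def flat_map(f, elements):
    """Apply f to each element (f must return an iterable) and flatten
    the resulting iterables by exactly one level into a single list."""
    return list(chain.from_iterable(f(x) for x in elements))


print(flat_map(lambda x: [x, x * 10], [1, 2, 3]))
print(flat_map(lambda x: x.split(" "), ["Python Pool", "Latracal Solutions"]))

# Only one level is flattened: nested lists inside the returned list stay nested
print(flat_map(lambda x: [[x]], [1, 2]))
```

The last call shows that flattening is shallow. Spark's flatMap() behaves the same way, so deeper nesting must be unpacked inside `f` itself.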

Syntax

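```python
RDD.flatMap(f, preservesPartitioning=False)
```

Here, f is the function applied to each element. It must return an iterable (a list, tuple, range, generator, and so on), whose items become separate elements of the new RDD. preservesPartitioning defaults to False and only matters for key-value (pair) RDDs whose keys the function leaves unchanged.

Example of Python flatMap() function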

In this example, we will use the flatMap() function with a lambda function to split each string into words. First, we take the input data. Then, we use the sparkContext.parallelize() method to create a parallelized collection, which distributes the data across multiple nodes instead of depending on a single node to process it. Next, we apply flatMap() with a lambda function that splits each string on spaces. Finally, we print the elements of the resulting RDD with a for loop. Let us look at the example to understand the concept in detail.

```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('SparkByExamples.com').getOrCreate()

input_data = ["Python Pool",
              "Latracal Solutions",
              "Python pool is best",
              "Basic command in python"]

rdd = spark.sparkContext.parallelize(input_data)

# Split every string on spaces; flatMap() flattens the word lists into one RDD
rdd2 = rdd.flatMap(lambda x: x.split(" "))

for ele in rdd2.collect():
    print(ele)
```

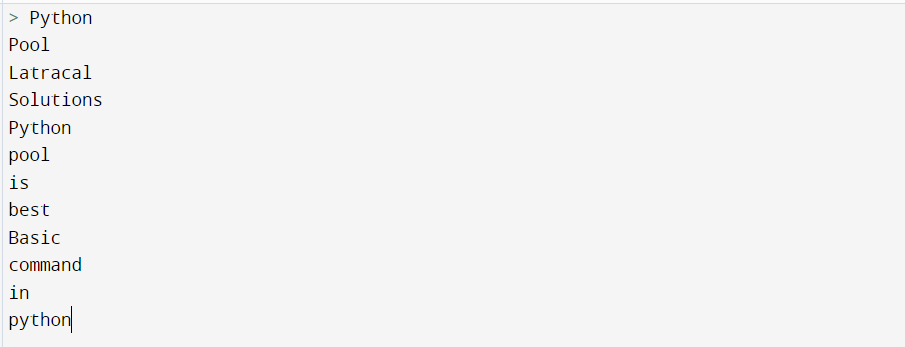

Output:

Explanation:

- First, we store the four input strings in input_data.

- Then, we turn the list into an RDD with sparkContext.parallelize().

- After that, we apply flatMap() with a lambda that splits each string on spaces. Each string yields a list of words, and flatMap() flattens those lists into a single RDD of words.

- Finally, we collect the result and print each word on its own line.
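The same split-and-flatten step can be reproduced locally to check the word count and to illustrate a detail of the lambda. split(" ") splits on every single space, so repeated or trailing spaces produce empty strings, while split() with no argument does not. The `messy` input below is a hypothetical string added for illustration.

```python
from itertools import chain

input_data = ["Python Pool", "Latracal Solutions",
              "Python pool is best", "Basic command in python"]

# Local equivalent of rdd.flatMap(lambda x: x.split(" ")).collect()
words = list(chain.from_iterable(x.split(" ") for x in input_data))
print(len(input_data), "lines ->", len(words), "words")

# split(" ") keeps empty strings when spaces repeat; split() drops them
messy = ["Python  Pool "]
with_empties = list(chain.from_iterable(x.split(" ") for x in messy))
without_empties = list(chain.from_iterable(x.split() for x in messy))
print(with_empties)
print(without_empties)
```

If your real data may contain irregular spacing, `lambda x: x.split()` is usually the safer choice inside flatMap().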

Complete Python PySpark flatMap() function example

This example combines the two previous ones. First, we take the input data and use the sparkContext.parallelize() method to create a parallelized collection, which distributes the data across multiple nodes instead of depending on a single node to process it. We print the original elements. Then, we apply flatMap() with a lambda function that splits each string on spaces, and we print the flattened words with a for loop. Let us look at the example to understand the concept in detail.

```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('SparkByExamples.com').getOrCreate()

input_data = ["Python Pool",
              "Latracal Solutions",
              "Python pool is best",
              "Basic command in python"]

rdd = spark.sparkContext.parallelize(input_data)

# Print the original strings
for element in rdd.collect():
    print(element)

print("\n")  # separate the two outputs

# Split every string on spaces and flatten the result
rdd2 = rdd.flatMap(lambda x: x.split(" "))

for ele in rdd2.collect():
    print(ele)
```

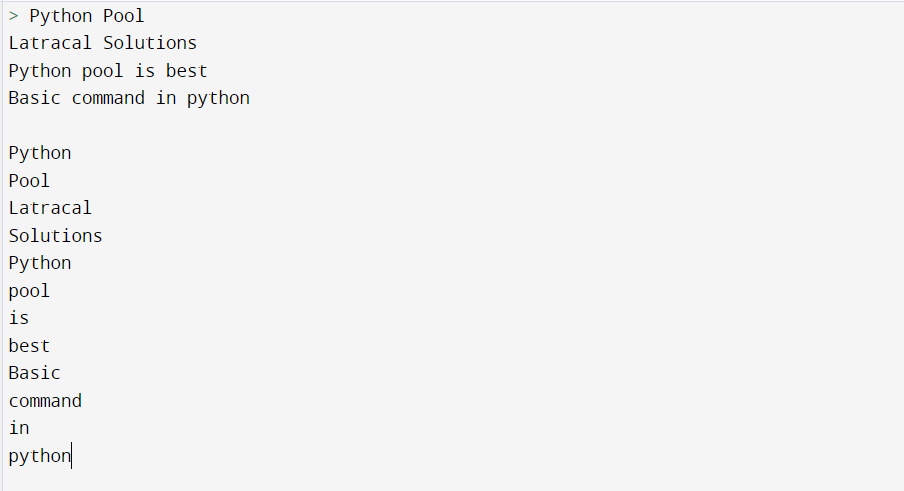

Output:

Explanation:

- First, we store the four input strings in input_data.

- Then, we turn the list into an RDD with sparkContext.parallelize().

- Next, we collect the RDD and print each original string with a for loop, followed by blank lines as a separator.

- After that, we apply flatMap() with a lambda that splits each string on spaces.

- Finally, we collect the new RDD and print each word with a for loop, so you can compare the four original lines with the twelve flattened words.
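flatMap() is a transformation, so Spark only records it. The work actually runs when an action such as collect() is called. Python's lazy iterators behave in a similar way, so the sketch below uses them as a local analogue (not Spark itself). A hypothetical `split_line` function records each call, and nothing is recorded until the lazy pipeline is turned into a list.

```python
from itertools import chain

calls = []


def split_line(line):
    calls.append(line)  # record each time the function actually runs
    return line.split(" ")


input_data = ["Python Pool", "Latracal Solutions",
              "Python pool is best", "Basic command in python"]

# Like rdd.flatMap(...): this only describes the work, nothing runs yet
lazy_words = chain.from_iterable(map(split_line, input_data))
print("calls before collect:", len(calls))

# Like rdd2.collect(): materializing the iterator triggers the work
words = list(lazy_words)
print("calls after collect:", len(calls))
print(words)
```

In Spark, each action recomputes the transformations it depends on unless the RDD has been cached with rdd.cache().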

Some more examples of the flatMap() function

1. Using range() in the flatMap() function

In this example, we will use the flatMap() function with a lambda function and Python's range() function. The sparkContext.parallelize() method creates a parallelized collection, which distributes the data across multiple nodes instead of depending on a single node. Then, we apply flatMap() with a lambda that returns range(1, x) for each element x. Let us look at the example to understand the concept in detail.

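```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('SparkByExamples.com').getOrCreate()

R = spark.sparkContext.parallelize([2, 3, 4])
# Each x expands to the numbers 1 .. x-1, and the results are flattened
output = R.flatMap(lambda x: range(1, x)).collect()
print(output)
```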

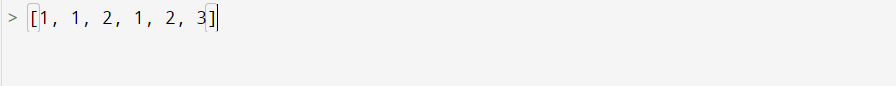

Output:

Explanation:

- First, we create an RDD from the list [2, 3, 4] with sparkContext.parallelize().

- Then, we apply flatMap() with a lambda that calls range(1, x) for each element x.

- range(1, x) produces the numbers from 1 up to x - 1, because the end value is excluded.

- For x = 2 we get 1; for x = 3 we get 1 and 2; for x = 4 we get 1, 2 and 3.

- flatMap() merges these ranges into one flat list, so the printed output is [1, 1, 2, 1, 2, 3].

- If you want the values in sorted order, you can apply Python's sorted() function to the collected list.
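Here is a local check of the arithmetic above, written in plain Python without Spark. Sorting is done with sorted() on the already-collected list. On a large RDD you would more likely sort on the cluster with RDD.sortBy() before collecting.

```python
from itertools import chain

data = [2, 3, 4]

# Local equivalent of R.flatMap(lambda x: range(1, x)).collect()
output = list(chain.from_iterable(range(1, x) for x in data))
print(output)          # [1, 1, 2, 1, 2, 3]
print(sorted(output))  # [1, 1, 1, 2, 2, 3]
```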

2. Making pairs using a lambda function

In this example, we will use the flatMap() function with a lambda function that returns a list of pairs. The sparkContext.parallelize() method creates a parallelized collection, which distributes the data across multiple nodes instead of depending on a single node. Then, we apply flatMap() with a lambda that returns two (x, x) tuples for each element x. Let us look at the example to understand the concept in detail.

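```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('SparkByExamples.com').getOrCreate()

R = spark.sparkContext.parallelize([2, 3, 4])
# Each x produces two pairs, and flatMap() merges them into one list
output = R.flatMap(lambda x: [(x, x), (x, x)]).collect()
print(output)
```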

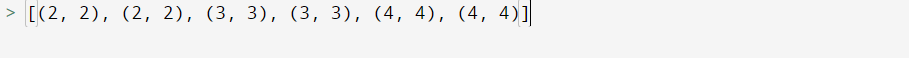

Output:

Explanation:

- First, we create an RDD from the list [2, 3, 4] with sparkContext.parallelize().

- Then, we apply flatMap() with a lambda that returns the list [(x, x), (x, x)] for each element x.

- flatMap() flattens these lists, so each element contributes two separate tuples instead of one nested list.

- Finally, we print the collected result: (2, 2), (2, 2), then (3, 3), (3, 3), and (4, 4), (4, 4).
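The function passed to flatMap() does not have to return the same number of items for every element. It may return several, one, or none, and an empty result simply drops that element. The plain-Python sketch below shows this with a hypothetical `pairs` function. In PySpark, the equivalent call would be R.flatMap(pairs).collect().

```python
from itertools import chain

data = [2, 3, 4]


def pairs(x):
    # Hypothetical rule: emit (x - 2) copies of (x, x), i.e. none for 2,
    # one for 3 and two for 4
    return [(x, x)] * (x - 2)


output = list(chain.from_iterable(pairs(x) for x in data))
print(output)  # [(3, 3), (4, 4), (4, 4)]
```

Because an empty list contributes nothing, flatMap() can filter and transform in a single pass.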

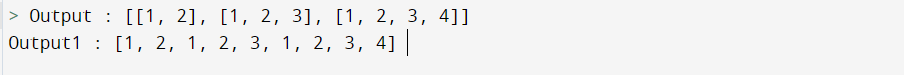

Difference between map() and flatMap() in PySpark

map():

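The map() function returns a new RDD by applying a function to each element of the RDD. It produces exactly one output element per input element, so if the function returns a list, that whole list becomes a single element of the result.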

To learn how Python's built-in map() function works, you can read our in-depth guide on it.

flatMap():

flatMap() works like map(): it returns a new RDD by applying a function to each element of the RDD. The difference is that the function must return an iterable, and the items of each returned iterable are flattened into the result. As a result, one input element can produce zero, one, or many output elements.

Let us look at an example to understand the difference in detail.

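```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('SparkByExamples.com').getOrCreate()

R = spark.sparkContext.parallelize([3, 4, 5])
# map(): one output element per input element, so the lists stay nested
output = R.map(lambda x: list(range(1, x))).collect()
print("Output : ", output)

S = spark.sparkContext.parallelize([3, 4, 5])
# flatMap(): the items of each returned range are flattened into the result
output1 = S.flatMap(lambda x: range(1, x)).collect()
print("Output : ", output1)
```

Output:

Output :  [[1, 2], [1, 2, 3], [1, 2, 3, 4]]

Output :  [1, 2, 1, 2, 3, 1, 2, 3, 4]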
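The relationship between the two functions can be stated precisely: flatMap(f) gives the same elements as map(f) followed by flattening one level. The plain-Python sketch below (no Spark involved) checks this on the same input. The named function `f` stands in for the lambdas used above.

```python
from itertools import chain

data = [3, 4, 5]


def f(x):
    return list(range(1, x))


mapped = [f(x) for x in data]                          # like R.map(f).collect()
flat_mapped = list(chain.from_iterable(map(f, data)))  # like S.flatMap(f).collect()

print(mapped)       # [[1, 2], [1, 2, 3], [1, 2, 3, 4]]
print(flat_mapped)  # [1, 2, 1, 2, 3, 1, 2, 3, 4]
print(len(mapped), len(flat_mapped))  # 3 9
```

map() keeps three elements, one per input. flatMap() yields nine, one per item produced by `f`.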

Conclusion

In this tutorial, we have learned about the flatMap() function in PySpark. We have also seen what an RDD is and what the flatMap() function does. Then, we discussed several examples of the function, each explained in detail. You can use whichever approach suits your requirements in your program. Finally, we discussed the difference between the map() and flatMap() functions.

If you have any doubts or questions, do let me know in the comment section below. I will try to help you as soon as possible.