Reading a CSV file is not always as easy as you might think. CSV files are generally exported by other systems and can be very large or hard to read. To make this task easier, we will use the numpy module in Python. If you have not studied numpy yet, I recommend working through my previous tutorial on numpy first.

Introduction

Loading data properly is one of the harder parts of working with data. The most common format for that data is CSV. You might wonder whether there is a direct way to import the contents of a CSV file into a record array, much as we do in R programming.

Why is the CSV file format used?

CSV is a plain-text format, which makes the data easy to manipulate and easy to import into a spreadsheet or database. For example, you might want to export certain statistics to a CSV file and then import it into a spreadsheet for further analysis.

CSV is also easy to work with programmatically. Python can handle CSV files directly through ordinary text file and string operations.
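Because a CSV file is plain text, ordinary string operations are enough to produce and parse one. The following is a minimal sketch, assuming simple values that contain no commas or quotes; the statistics and variable names are made up for illustration.

```python
# Hypothetical statistics to export; the names and numbers are illustrative only.
stats = [("mean", 4.5), ("median", 4.0), ("max", 9.0)]

# Build CSV text by hand: one header line, then one comma-separated line per record.
lines = ["statistic,value"]
for name, value in stats:
    lines.append(f"{name},{value}")
csv_text = "\n".join(lines)
print(csv_text)

# Parse the text back with plain string methods, skipping the header line.
parsed = [line.split(",") for line in csv_text.splitlines()[1:]]
print(parsed)
```

For values that may themselves contain commas, quotes, or line breaks, the csv module is the safer choice because it handles the escaping.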

Reading of a CSV file with numpy in Python

numpy is used extensively by data scientists and machine learning engineers because they work with a lot of data that is generally stored in CSV files.

numpy makes it a lot easier for data scientists to work with CSV files. This article covers six ways to read a CSV file in Python, with numpy and with related tools:

- Without using any library.

- Using the numpy.loadtxt() function.

- Using the numpy.genfromtxt() function.

- Using the csv module.

- Using a pandas DataFrame.

- Using PySpark.

1. Without using any built-in library

It may sound surprising, but Python can read a CSV file without importing anything. The built-in open function opens the file and returns a file object; iterating over that object yields each line of the CSV file as a string. Let us go through the syntax to make this clearer.

Syntax:-

open('File_name')

Parameter

All we need to do is pass the file name as a parameter to the built-in open function.

Return value

It returns a file object. Iterating over it yields the content of the file line by line, in string format.

Let’s do some coding.

    # Open the file and print it line by line
    with open('sample.csv') as file_data:
        for row in file_data:
            # each row already ends with a newline, so suppress print's own
            print(row, end='')

OUTPUT:-

    Name,Hire Date,Salary,Sick Days Left
    Graham Bell,03/15/19,50000.00,10
    John Cleese,06/01/18,65000.00,8
    Kimmi Chandel,05/12/20,45000.00,10
    Terry Jones,11/01/13,70000.00,3
    Terry Gilliam,08/12/20,48000.00,7
    Michael Palin,05/23/20,66000.00,8
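Printing the lines is only the first step; to work with the values, each line has to be split into fields. The sketch below shows one way to do that with plain string methods. It assumes no field contains a comma, and it writes two rows of the sample data to a temporary file only so that the example is self-contained.

```python
import os
import tempfile

# Recreate the first rows of the sample data in a temporary file.
csv_text = (
    "Name,Hire Date,Salary,Sick Days Left\n"
    "Graham Bell,03/15/19,50000.00,10\n"
    "John Cleese,06/01/18,65000.00,8\n"
)
with tempfile.NamedTemporaryFile("w", suffix=".csv", delete=False) as tmp:
    tmp.write(csv_text)
    path = tmp.name

with open(path) as file_data:
    # The first line holds the column names.
    header = next(file_data).rstrip("\n").split(",")
    # Every remaining line becomes a list of string fields.
    rows = [line.rstrip("\n").split(",") for line in file_data]

os.remove(path)  # clean up the temporary file

# Fields are strings, so numeric columns must be converted explicitly.
salaries = [float(row[header.index("Salary")]) for row in rows]
print(header)
print(salaries)
```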

2. Using numpy.loadtxt() function

numpy.loadtxt() loads data from a text file into a numpy array. It is similar to numpy.genfromtxt() when no data is missing; for files with missing values, genfromtxt() is the better fit.

Syntax:

numpy.loadtxt(fname)

The default data type(dtype) parameter for numpy.loadtxt( ) is float.

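    import numpy as np

    # delimiter="," is required for comma-separated data (the default is whitespace);
    # dtype=int converts the text to integers instead of the default float
    data = np.loadtxt("sample.csv", delimiter=",", dtype=int)
    print(data)

OUTPUT:-

    [[1 2 3]
     [4 5 6]]

Explanation of the code

- Import the numpy library under the alias np.

- Load the CSV file, splitting each line on commas with delimiter and converting the data to the integer data type with dtype. If the file starts with a header line, pass skiprows=1 as well.

- Print the data variable to get the desired output.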
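The options mentioned above can be tried without a file on disk, because loadtxt also accepts file-like objects. This is a small sketch with made-up data held in an io.StringIO; it shows delimiter, skiprows, and usecols working together.

```python
import io
import numpy as np

# In-memory stand-in for a CSV file with a header line (illustrative data).
csv_text = "a,b,c\n1,2,3\n4,5,6\n"

# delimiter="," splits on commas; skiprows=1 skips the header line.
data = np.loadtxt(io.StringIO(csv_text), delimiter=",", skiprows=1, dtype=int)
print(data)

# usecols selects columns by zero-based index: here the first and the third.
subset = np.loadtxt(
    io.StringIO(csv_text), delimiter=",", skiprows=1, usecols=(0, 2), dtype=int
)
print(subset)
```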

3. Using numpy.genfromtxt() function

The genfromtxt() function is used quite frequently to load data from text files in Python. We can read data from CSV files using this function and store it in a numpy array.

This function has many arguments, which makes it a lot easier to load the data in the desired format. We can specify the delimiter, deal with missing values, delete specified characters, and specify the data type of the data using the different arguments of this function.

Let's write some code to make the concept clearer.

Syntax:

numpy.genfromtxt(fname)

Parameter

The required parameter is the name of the CSV file that you want to read. Beyond that, we can specify the delimiter, names, and so on. The main parameters are the following:

| Name | Description |
| --- | --- |
| fname | File, file name, or list to read. |
| dtype | The data type of the resulting array. If None, the data type is determined by the content of each column. |
| comments | The character that marks the start of a comment. All characters occurring on a line after it are discarded. |
| delimiter | The string used to separate values. By default, any consecutive whitespace acts as a delimiter. |
| skip_header | The number of lines to skip at the beginning of the file. |
| skip_footer | The number of lines to skip at the end of the file. |
| missing_values | The set of strings corresponding to missing data. |
| filling_values | The set of values to use as defaults when data is missing. |
| usecols | The columns to read, counting from 0. For example, usecols = (1,4,5) extracts the 2nd, 5th, and 6th columns. |

Return Value

It returns an ndarray.

    from numpy import genfromtxt

    data = genfromtxt('sample.csv', delimiter=',', skip_header=1)
    print(data)

OUTPUT:

    [[1. 2. 3.]
     [4. 5. 6.]]

Explanation of the code

- Import genfromtxt from the numpy package.

- Call genfromtxt with the file name, delimiter, and skip_header as parameters, and store the returned ndarray in the variable data.

- Print the variable to get the output.
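The handling of missing values is where genfromtxt differs most from loadtxt. The sketch below uses made-up data with two empty fields, held in an io.StringIO instead of a file. It shows filling_values replacing the gaps, and names=True reading the header line into field names so that columns can be accessed by name.

```python
import io
import numpy as np

# Illustrative data: the header line is followed by rows with empty fields.
csv_text = "x,y,z\n1,,3\n4,5,\n"

# Skip the header and replace every missing entry with 0.
filled = np.genfromtxt(
    io.StringIO(csv_text), delimiter=",", skip_header=1, filling_values=0
)
print(filled)

# names=True takes the field names from the first line and returns a
# structured array; without filling_values, missing floats become nan.
records = np.genfromtxt(io.StringIO(csv_text), delimiter=",", names=True)
print(records.dtype.names)
print(records["x"])
```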

4. Using the csv module in Python

The csv module is used to read and write CSV files in Python. In this method, we read the data from a CSV file with this module and store it in a list, and then convert that list to a numpy array.

The code below will explain this.

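    import csv
    import numpy as np

    with open('sample.csv', 'r', newline='') as f:
        # sample.csv is comma-separated, so the delimiter is ","
        rows = list(csv.reader(f, delimiter=','))

    # csv.reader yields strings; dtype=float converts them to numbers
    # (this assumes the file holds only numeric rows, with no header line)
    data = np.array(rows, dtype=float)
    print(data)

OUTPUT:-

    [[1. 2. 3.]
     [4. 5. 6.]]

Explanation of the code

- Import the csv module.

- Import numpy, as we want to use the numpy.array function.

- Open the file sample.csv in read mode, as indicated by 'r'.

- After separating the values using the delimiter, store the rows in a list and convert that list to an array with numpy.array. Because csv.reader returns every value as a string, dtype=float is needed to get numbers.

- Print the data to get the desired output.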
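When the file does have a header line, it can be pulled off the reader before the remaining rows are converted. This is a minimal sketch with made-up data in an io.StringIO; with a real file, the reader would be built from the open file object instead.

```python
import csv
import io
import numpy as np

# Illustrative data with a header line.
csv_text = "a,b,c\n1,2,3\n4,5,6\n"

reader = csv.reader(io.StringIO(csv_text))
header = next(reader)                       # consume the header row
data = np.array(list(reader), dtype=float)  # convert the remaining rows

print(header)
print(data)
```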

5. Using a pandas DataFrame in Python

We can use a pandas DataFrame to read CSV data into an array in Python. To do this, we read the file into a DataFrame with read_csv() and then convert it into a numpy array through the DataFrame's values attribute.

    from pandas import read_csv

    df = read_csv('sample.csv')
    data = df.values
    print(data)

OUTPUT:-

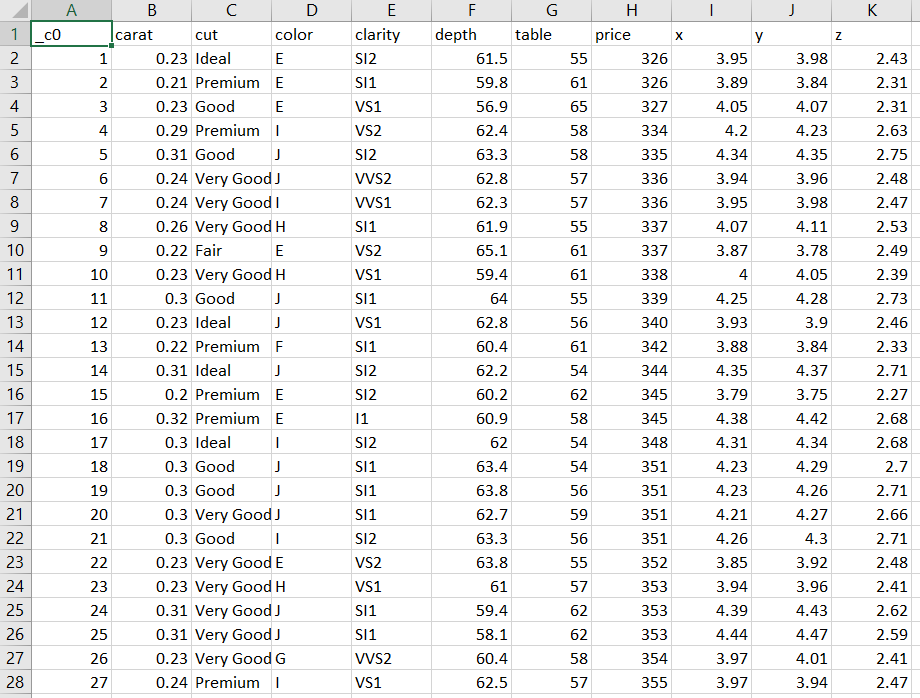

    [[1 2 3]
     [4 5 6]]

To show some of the power of pandas' CSV capabilities, I’ve created a slightly more complicated file to read, called hrdataset.csv. It contains data on company employees:

hrdataset CSV file

    Name,Hire Date,Salary,Sick Days Left
    Graham Bell,03/15/19,50000.00,10
    John Cleese,06/01/18,65000.00,8
    Kimmi Chandel,05/12/20,45000.00,10
    Terry Jones,11/01/13,70000.00,3
    Terry Gilliam,08/12/20,48000.00,7
    Michael Palin,05/23/20,66000.00,8

    import pandas

    dataframe = pandas.read_csv('hrdataset.csv')
    print(dataframe)

OUTPUT:-

                Name Hire Date   Salary  Sick Days Left
    0    Graham Bell  03/15/19  50000.0              10
    1    John Cleese  06/01/18  65000.0               8
    2  Kimmi Chandel  05/12/20  45000.0              10
    3    Terry Jones  11/01/13  70000.0               3
    4  Terry Gilliam  08/12/20  48000.0               7
    5  Michael Palin  05/23/20  66000.0               8
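Since the goal of this article is a numpy array, it helps to see how the numeric columns of such a mixed DataFrame can be pulled out. The sketch below embeds the hrdataset rows in a string so that it runs without the file, and uses to_numpy(), which is the method form of the values attribute.

```python
import io
import pandas as pd

# The hrdataset rows from above, embedded so the sketch is self-contained.
csv_text = """Name,Hire Date,Salary,Sick Days Left
Graham Bell,03/15/19,50000.00,10
John Cleese,06/01/18,65000.00,8
Kimmi Chandel,05/12/20,45000.00,10
Terry Jones,11/01/13,70000.00,3
Terry Gilliam,08/12/20,48000.00,7
Michael Palin,05/23/20,66000.00,8
"""

df = pd.read_csv(io.StringIO(csv_text))

# A single column becomes a one-dimensional numpy array.
salaries = df["Salary"].to_numpy()

# Several numeric columns become a two-dimensional array.
numeric = df[["Salary", "Sick Days Left"]].to_numpy()

print(salaries.mean())
print(numeric.shape)
```

Selecting only the numeric columns avoids the object-dtype array that results from converting a DataFrame that mixes text and numbers.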

6. Using PySpark in Python

Reading and writing data is one of the most basic tasks in Spark, and more often than not it is the starting point for any form of big data processing. There are several ways to read a CSV file using PySpark in Python; before moving on to the specifics, it helps to know the core syntax for reading data.

Syntax:-

spark.read.format("...").option("key", "value").schema(…).load()

Parameters

DataFrameReader is the foundation for reading data in Spark; it can be accessed via the spark.read attribute.

- format: specifies the file format, such as CSV, JSON, or Parquet. The default is Parquet.

- option: a set of key-value configurations that specify how to read the data.

- schema: optional. Use it to supply the schema explicitly instead of having Spark infer it from the data source.

There are three ways to read a CSV file using PySpark in Python:

- df = spark.read.format("csv").option("header", "true").load(filepath)

- df = spark.read.format("csv").option("inferSchema", "true").load(filepath)

- df = spark.read.format("csv").schema(csvSchema).load(filepath), where csvSchema is a schema you have defined beforehand

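Let's do some coding to understand.

    # `spark` is the active SparkSession (predefined in Databricks notebooks)
    diamonds = (
        spark.read.format("csv")
        .option("header", "true")
        .option("inferSchema", "true")
        .load("/databricks-datasets/Rdatasets/data-001/csv/ggplot2/diamonds.csv")
    )

This statement only builds the DataFrame and prints nothing; call diamonds.show() to display its rows.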

Conclusion

This article has covered six different ways to read data from a CSV file in Python, with numpy and with related tools. This brings us to the end of our article, “How to read CSV File in Python using numpy.” I hope you are now clear on the concepts related to CSV files, how to read them, and the different parameters used. If you understand the basics of reading CSV files, you won’t ever be caught flat-footed when importing data.

Make sure you practice as much as possible and gain more experience.

Got a question for us? Please mention it in the comments section of this “6 ways to read CSV File with numpy in Python” article, and we will get back to you as soon as possible.

FAQs

To skip the header line of a CSV file, use csv.reader() and next() from the standard library. Let's code to understand.

Let us consider the following sample.csv file.

    fruit,count
    apple,1
    banana,2

The code below skips the first line and prints the rest:

    import csv

    # newline='' is the recommended way to open files for the csv module
    with open('sample.csv', newline='') as file:
        csv_reader = csv.reader(file)
        next(csv_reader)  # skip the header row
        for row in csv_reader:
            print(row)

OUTPUT:-

    ['apple', '1']
    ['banana', '2']

As you can see, the first line, which contained fruit,count, is skipped.

To count the number of lines in a CSV file, use len() and list() on a csv reader.

Let us consider this sample.csv data:

    1,2,3
    4,5,6
    7,8,9

The code below counts its rows:

    import csv

    with open('sample.csv', newline='') as file_data:
        reader = csv.reader(file_data)
        count_lines = len(list(reader))  # read every row into a list, then count
    print(count_lines)

OUTPUT:-

    3

As you can see, the len() function reports the three rows in the sample.csv file.
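Calling list() on the reader loads every row into memory at once, which can be wasteful for a very large file. A possible alternative, sketched here with the same three rows held in an io.StringIO, is to count the rows one at a time with a generator expression.

```python
import csv
import io

# The same three rows as above, held in memory for the sake of the example.
csv_text = "1,2,3\n4,5,6\n7,8,9\n"

reader = csv.reader(io.StringIO(csv_text))

# Add 1 for each row as it is read, without storing the rows.
count_lines = sum(1 for _ in reader)
print(count_lines)
```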