In this technological era, data is what holds great value. Data carries a huge amount of information, and at large scale we commonly call it big data. Handling such data is one of the most challenging tasks in technical science today, and several constraints make it difficult. One of them is the lack of resources to handle it, such as computing power and memory. To overcome this problem, users rely on distributed systems: the data is spread across several machines, processed there, and then collected again once the operations are done. Apache Spark provides exactly this functionality. It is a platform that helps us handle and manipulate big data according to our requirements.

Spark itself runs on the JVM, so to work with it from Python we need an interface that lets us communicate with it. PySpark is that interface: the Python API for Apache Spark. It lets you write Spark applications using Python APIs and provides the PySpark shell for interactively analyzing your data in a distributed environment.

To use PySpark conveniently, its environment has to be configured to match the system. PYSPARK_DRIVER_PYTHON is the environment variable that sets the Python executable used by the PySpark driver. Its companion, PYSPARK_PYTHON, sets the executable for both the driver and the workers, and PYSPARK_DRIVER_PYTHON falls back to it when unset. There are several ways to set these variables, and in this article we will look at each of them.

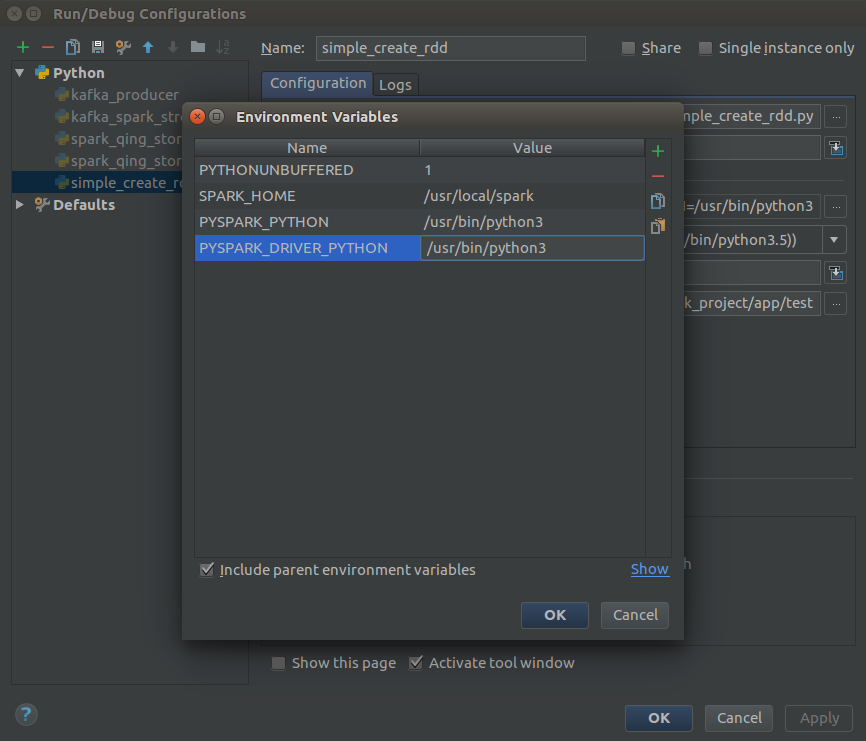

Setting PYSPARK_DRIVER_PYTHON in PyCharm

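To set the environment variable in the PyCharm IDE, open Run > Edit Configurations... to reach the Run/Debug Configurations dialog, select your script's configuration, and in the Environment variables field add PYSPARK_PYTHON and PYSPARK_DRIVER_PYTHON, each set to the path of the Python interpreter you want to use.

Then click OK to confirm.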
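To confirm that the Run/Debug configuration took effect, you can run a small script under it that reports what the process sees. This is only a sketch: pyspark_python_settings is a hypothetical helper, and the fallback it applies mirrors Spark's documented default, where an unset PYSPARK_DRIVER_PYTHON falls back to PYSPARK_PYTHON.

```python
import os
import sys


def pyspark_python_settings():
    """Report the PySpark interpreter variables visible to this process.

    An unset PYSPARK_DRIVER_PYTHON falls back to PYSPARK_PYTHON, matching
    Spark's documented default; None means Spark will use its own default.
    """
    worker = os.environ.get("PYSPARK_PYTHON")
    driver = os.environ.get("PYSPARK_DRIVER_PYTHON", worker)
    return {"PYSPARK_PYTHON": worker, "PYSPARK_DRIVER_PYTHON": driver}


if __name__ == "__main__":
    # The interpreter PyCharm actually launched for this run
    print("running under:", sys.executable)
    for name, value in pyspark_python_settings().items():
        print(f"{name} = {value}")
```

If both lines print None, the variables were not added to the configuration you ran.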

Setting PYSPARK_DRIVER_PYTHON using the spark-env.sh file

If you are not using PyCharm, you can set the variables with this method instead. First locate the spark-env.sh file, which lives at SPARK_HOME/conf/spark-env.sh, and then add a few export statements to it. If the file doesn't exist, copy (or rename) spark-env.sh.template to spark-env.sh. The lines to add are as follows:

```bash
export PYSPARK_PYTHON=/usr/bin/python
export PYSPARK_DRIVER_PYTHON=/usr/bin/python
```
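If you prefer to script this step, here is a minimal sketch in Python. The helper add_pyspark_exports is hypothetical; it assumes SPARK_HOME points to your Spark installation and that you have write access to its conf directory.

```python
import shutil
from pathlib import Path


def add_pyspark_exports(spark_home, python_path="/usr/bin/python"):
    """Append PYSPARK_PYTHON / PYSPARK_DRIVER_PYTHON exports to spark-env.sh.

    Creates conf/spark-env.sh from spark-env.sh.template if it is missing,
    and skips lines that are already present so reruns are harmless.
    """
    conf_dir = Path(spark_home) / "conf"
    conf_dir.mkdir(parents=True, exist_ok=True)
    env_file = conf_dir / "spark-env.sh"
    template = conf_dir / "spark-env.sh.template"
    if not env_file.exists() and template.exists():
        shutil.copy(template, env_file)

    existing = env_file.read_text() if env_file.exists() else ""
    wanted = [
        f"export PYSPARK_PYTHON={python_path}",
        f"export PYSPARK_DRIVER_PYTHON={python_path}",
    ]
    with env_file.open("a") as fh:
        if existing and not existing.endswith("\n"):
            fh.write("\n")  # keep the new exports on their own lines
        for line in wanted:
            if line not in existing.splitlines():
                fh.write(line + "\n")
    return env_file
```

For example, add_pyspark_exports("/opt/apache-spark", "/usr/bin/python3") would write the two exports pointing at python3.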

Setting PYSPARK_DRIVER_PYTHON from within a PySpark script

However, if you work with a virtual environment, it is fairly tedious to look up its interpreter path and set it with the method above. In that case, you can set the variables in the script itself, before creating the PySpark session, with the following code:

```python
import os
import sys

# Must run before the SparkSession / SparkContext is created
os.environ['PYSPARK_PYTHON'] = sys.executable
os.environ['PYSPARK_DRIVER_PYTHON'] = sys.executable
```

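The os module's environ mapping sets environment variables for the current process and any processes it launches, such as PySpark's Python workers. sys.executable is a string holding the absolute path of the Python interpreter running the script, so both variables end up pointing at the virtual environment's Python.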

Why do we specify the Python interpreter in the PYSPARK_DRIVER_PYTHON variable?

The application we are developing might be simple, or it might be a larger, heavyweight one. For simple applications, shipping the extra modules with the --py-files option of spark-submit is enough to handle the requirements. For larger applications, however, --py-files cannot satisfy all the required dependencies. There are also times when we want to use different Python versions in different scenarios, and --py-files does not help there either.
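For the simple case, --py-files takes .py, .zip or .egg files. A minimal sketch of bundling a pure-Python package into a zip for it, using only the standard library; build_py_files_zip is a hypothetical helper name.

```python
import shutil
from pathlib import Path


def build_py_files_zip(package_dir, output_dir="."):
    """Zip a pure-Python package so it can be passed to spark-submit --py-files.

    The archive keeps the package folder at its root, so `import <package>`
    works once Spark puts the zip on the workers' sys.path.
    """
    package_dir = Path(package_dir).resolve()
    base_name = Path(output_dir).resolve() / package_dir.name
    archive = shutil.make_archive(
        str(base_name),
        "zip",
        root_dir=package_dir.parent,  # zip paths are relative to this folder
        base_dir=package_dir.name,    # ...and include only the package itself
    )
    return Path(archive)
```

The resulting file can be passed as spark-submit --py-files mypkg.zip app.py, or added at runtime with spark.sparkContext.addPyFile("mypkg.zip"). It still cannot carry a different Python version or compiled dependencies, which is the limitation described above.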

One might start development on a local computer and later, for some reason, move to a distributed system such as a Spark cluster. To run the already developed model there, the environment has to be configured accordingly, which can get tedious. In this scenario, a packaged Python virtual environment helps a lot: it eases the transition from the local computer to the distributed system.
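One way to do this, described in Spark's Python package management documentation, is to pack the virtual environment into an archive (for example with venv-pack or conda-pack), ship it with the spark.archives setting (Spark 3.1 and later; spark.yarn.dist.archives on YARN), and point PYSPARK_PYTHON at the interpreter inside the unpacked archive. The sketch below assumes the archive already exists; venv_archive_settings and start_session_with_venv are hypothetical helper names, and the alias "environment" is only a label.

```python
import os


def venv_archive_settings(archive_path, alias="environment"):
    """Return the env var and Spark config that ship a packed virtualenv.

    Spark unpacks the archive on each executor into a folder named `alias`,
    so the workers' interpreter lives at ./<alias>/bin/python.
    """
    return {
        "env": {"PYSPARK_PYTHON": f"./{alias}/bin/python"},
        "conf": {"spark.archives": f"{archive_path}#{alias}"},
    }


def start_session_with_venv(archive_path, alias="environment"):
    """Apply the settings and create a session (requires pyspark)."""
    from pyspark.sql import SparkSession

    settings = venv_archive_settings(archive_path, alias)
    os.environ.update(settings["env"])  # must happen before getOrCreate()
    builder = SparkSession.builder
    for key, value in settings["conf"].items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

Keeping the settings in a plain function makes it easy to inspect them, or to reuse them as spark-submit options, without starting Spark.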

However, this is not an entirely automated task. We still need to specify, among other things, which Python version was used to develop the application, and this matters most when making the transition. Even if one builds the application on PySpark from scratch, one still needs to specify the Python version the application will run with.
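PySpark refuses to run when the driver and the workers use different major.minor Python versions, so it helps to compare the two interpreters before submitting a job. Here is a minimal sketch using only the standard library; the helper names are hypothetical, and the paths you pass in are whatever you plan to put in PYSPARK_DRIVER_PYTHON and PYSPARK_PYTHON.

```python
import subprocess
import sys


def python_version_of(executable):
    """Return 'major.minor' for the given Python interpreter path."""
    result = subprocess.run(
        [executable, "-c", "import sys; print('%d.%d' % sys.version_info[:2])"],
        capture_output=True,
        text=True,
        check=True,  # raise if the path is not a working interpreter
    )
    return result.stdout.strip()


def versions_match(driver_python, worker_python):
    """True when both interpreters share the same major.minor version."""
    return python_version_of(driver_python) == python_version_of(worker_python)


if __name__ == "__main__":
    print(python_version_of(sys.executable))
```

For example, versions_match("/usr/bin/python3", "/opt/venv/bin/python") returns False if one is Python 3.8 and the other 3.11, which is exactly the mismatch PySpark would reject.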

Setting Up Apache Spark on Arch Linux

To set the Apache Spark environment variables, append them to your ~/.bashrc with the commands below.
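The py4j archive in the last export carries a version number (py4j-0.10.7 here) that differs between Spark releases. Before running the commands, a small Python sketch like this one can look up the actual file name; find_py4j_zip is a hypothetical helper and assumes the standard SPARK_HOME/python/lib layout.

```python
from pathlib import Path


def find_py4j_zip(spark_home):
    """Return the py4j source zip bundled with this Spark install.

    The file name embeds the py4j version (e.g. py4j-0.10.7-src.zip),
    which changes between Spark releases.
    """
    lib_dir = Path(spark_home) / "python" / "lib"
    matches = sorted(lib_dir.glob("py4j-*-src.zip"))
    if not matches:
        raise FileNotFoundError(f"no py4j-*-src.zip under {lib_dir}")
    return matches[-1]
```

Running print(find_py4j_zip("/opt/apache-spark")) shows the name to use in the PYTHONPATH export.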

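```bash
# Spark 2.2 and later require Java 8 or newer; adjust the path to your installed JDK
echo 'export JAVA_HOME=/usr/lib/jvm/java-8-openjdk/jre' >> /home/user/.bashrc
echo 'export SPARK_HOME=/opt/apache-spark' >> /home/user/.bashrc
echo 'export PYTHONPATH=$SPARK_HOME/python/:$PYTHONPATH' >> /home/user/.bashrc
# The py4j version must match the zip shipped in $SPARK_HOME/python/lib
echo 'export PYTHONPATH=$SPARK_HOME/python/lib/py4j-0.10.7-src.zip:$PYTHONPATH' >> /home/user/.bashrc
# Reload the file in the current shell
source /home/user/.bashrc
```

Conclusion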

So, in this article, we have seen how to set up the environment for using PySpark to communicate with Apache Spark. We have seen what PySpark is and what PYSPARK_DRIVER_PYTHON does, and then we looked at different ways to set the PYSPARK_DRIVER_PYTHON environment variable. I hope this article has helped you.
