In this article, we are going to learn how to calculate the mean squared error (MSE) in Python. We will use two Python libraries, NumPy and scikit-learn (sklearn), and we will also see how to calculate it without using any module.

MSE is a common error metric for regression problems, and it is especially natural when the errors are assumed to be normally distributed. It measures the average squared difference between the predicted values and the actual values: the squared differences are summed over all data points, and the sum is divided by the number of data points.

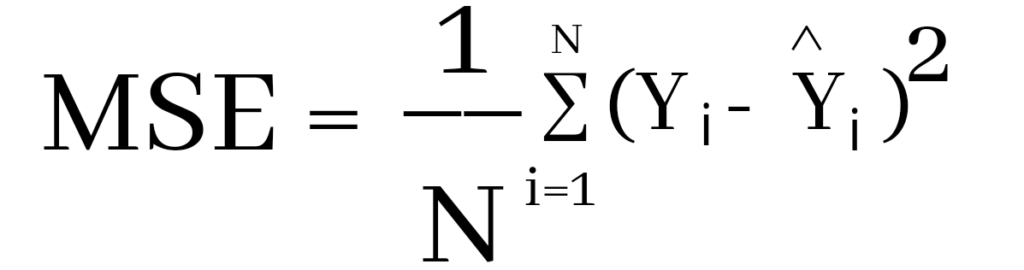

Formula to calculate mean squared error

Here Yi is the actual value and Ŷi is the predicted value for the i-th data point. The difference between them is squared so that positive and negative errors do not cancel each other out.
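In case the image does not render, the formula described above can be written out as follows. This is the standard definition of MSE; the symbol n for the number of data points is an assumption about the notation used in the image.

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(Y_i - \hat{Y}_i\right)^2$$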

Derivation of Mean Squared Error

Take the points (1,3), (2,2), (3,6), (4,1), (5,5) and suppose the fitted line used for prediction is y = 1.6 + 0.4x. The next step is to compute the predicted y value for each x. The predicted values are tabulated below.

| x value | Calculation of predicted y | Predicted y value |
|---|---|---|
| 1 | 1.6+0.4(1) | 2.0 |
| 2 | 1.6+0.4(2) | 2.4 |
| 3 | 1.6+0.4(3) | 2.8 |
| 4 | 1.6+0.4(4) | 3.2 |
| 5 | 1.6+0.4(5) | 3.6 |
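As a cross-check on the line itself, an ordinary least-squares fit can be computed from the five points with the textbook slope and intercept formulas. The sketch below is not from the original article, and the helper name `fit_line` is hypothetical. For these points it gives a slope of about 0.3 and an intercept of about 2.5, which differs from the line y = 1.6 + 0.4x used in the table. The MSE steps that follow work the same way for any prediction line.

```python
def fit_line(xs, ys):
    """Return (intercept, slope) of the ordinary least-squares line."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = sum of cross-deviations / sum of squared x-deviations
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    slope = s_xy / s_xx
    # The least-squares line always passes through (mean_x, mean_y)
    intercept = mean_y - slope * mean_x
    return intercept, slope


xs = [1, 2, 3, 4, 5]
ys = [3, 2, 6, 1, 5]
intercept, slope = fit_line(xs, ys)
print(round(intercept, 2), round(slope, 2))  # 2.5 0.3
```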

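Now find the error ( Yi – Ŷi ) for each point: 3 - 2.0 = 1, 2 - 2.4 = -0.4, 6 - 2.8 = 3.2, 1 - 3.2 = -2.2, 5 - 3.6 = 1.4.

Next, square all the errors: 1, 0.16, 10.24, 4.84, 1.96.

Adding the squared errors gives 18.2, and dividing by the number of points (5) gives the MSE: 18.2 / 5 = 3.64.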
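The steps above can be reproduced in a few lines of plain Python. This is a minimal sketch using the article's five points and the line y = 1.6 + 0.4x; the variable names are illustrative.

```python
xs = [1, 2, 3, 4, 5]
actual = [3, 2, 6, 1, 5]

# Predicted values from the line y = 1.6 + 0.4x
predicted = [1.6 + 0.4 * x for x in xs]

# Step 1: errors (actual minus predicted)
errors = [a - p for a, p in zip(actual, predicted)]

# Step 2: squared errors
squared_errors = [e ** 2 for e in errors]

# Step 3: average of the squared errors
mse = sum(squared_errors) / len(squared_errors)

print([round(e, 2) for e in errors])          # [1.0, -0.4, 3.2, -2.2, 1.4]
print([round(s, 2) for s in squared_errors])  # [1.0, 0.16, 10.24, 4.84, 1.96]
print(round(mse, 2))                          # 3.64
```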

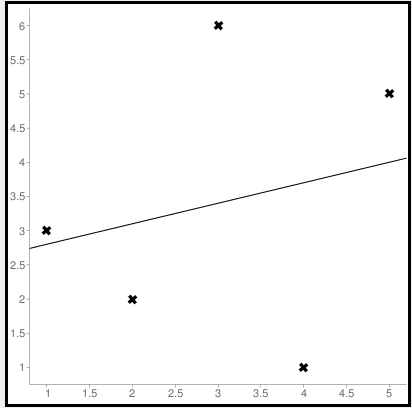

Linear regression graph

Let us consider the values (1,3), (2,2), (3,6), (4,1), (5,5) to plot the graph.

The straight line represents the predicted values in this graph, and the points represent the actual data. The vertical distance between each point and the line is the error for that point; squaring these distances and averaging them gives the mean squared error.


To get the Mean Squared Error in Python using NumPy

```python
import numpy as np

true_value_of_y = [3, 2, 6, 1, 5]
predicted_value_of_y = [2.0, 2.4, 2.8, 3.2, 3.6]

# Subtract element-wise, square each difference, then average
MSE = np.square(np.subtract(true_value_of_y, predicted_value_of_y)).mean()
print(MSE)
```

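First, the NumPy library is imported as np. Two variables are then created: true_value_of_y holds the actual values and predicted_value_of_y holds the values predicted by the line. Finally, np.subtract() computes the differences, np.square() squares them, and mean() averages the result, which is the mean squared error.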

Output

3.6400000000000006
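If the same calculation is needed in several places, it can be wrapped in a small function. The following is a sketch rather than part of the original article; the function name `mse_numpy` and the shape check are assumptions added for illustration.

```python
import numpy as np


def mse_numpy(y_true, y_pred):
    """Mean squared error of two equal-shaped sequences (illustrative helper)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    # Guard against silently broadcasting mismatched inputs
    if y_true.shape != y_pred.shape:
        raise ValueError("y_true and y_pred must have the same shape")
    return float(np.mean((y_true - y_pred) ** 2))


print(round(mse_numpy([3, 2, 6, 1, 5], [2.0, 2.4, 2.8, 3.2, 3.6]), 2))  # 3.64
```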

To get the MSE using sklearn

scikit-learn (imported as sklearn) is a machine learning library for Python. Its metrics module includes a ready-made function for calculating the MSE.

Syntax

sklearn.metrics.mean_squared_error(y_true, y_pred, *, sample_weight=None, multioutput='uniform_average', squared=True)

Parameters

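- y_true – true (actual) values of y
- y_pred – predicted values of y
- sample_weight – optional weights for the individual samples
- multioutput – how the errors are combined when there are several outputs:
  - 'raw_values' – returns one error per output
  - 'uniform_average' – averages the errors of all outputs with equal weight (the default)
- squared – if True, returns the MSE; if False, returns the root mean squared error (RMSE). In recent scikit-learn releases this parameter is deprecated in favor of the separate root_mean_squared_error function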

Returns

Mean squared error.

Code

```python
from sklearn.metrics import mean_squared_error

true_value_of_y = [3, 2, 6, 1, 5]
predicted_value_of_y = [2.0, 2.4, 2.8, 3.2, 3.6]

# The true values come first, the predicted values second
print(mean_squared_error(true_value_of_y, predicted_value_of_y))
```

First, mean_squared_error is imported from the sklearn.metrics module. Two variables are then created: true_value_of_y holds the actual values and predicted_value_of_y holds the predicted values. Finally, both are passed to mean_squared_error(), which returns the MSE.

Output

3.6400000000000006
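The optional parameters listed above can be tried out with a short sketch. The weights and the second output column below are made-up illustration values, not data from the article. Only sample_weight and multioutput are used here, since both are long-standing parameters of mean_squared_error.

```python
from sklearn.metrics import mean_squared_error

y_true = [3, 2, 6, 1, 5]
y_pred = [2.0, 2.4, 2.8, 3.2, 3.6]

# sample_weight: weighted average of the squared errors.
# A zero weight removes the third point (the largest error) from the average.
weights = [1, 1, 0, 1, 1]
weighted_mse = mean_squared_error(y_true, y_pred, sample_weight=weights)
print(round(float(weighted_mse), 2))  # 1.99

# multioutput: with two output columns, 'raw_values' returns one MSE per column.
y_true_2d = [[3, 0], [2, 1], [6, 2]]
y_pred_2d = [[2.0, 0], [2.4, 1], [2.8, 4]]
per_output = mean_squared_error(y_true_2d, y_pred_2d, multioutput="raw_values")
print([round(float(v), 2) for v in per_output])  # [3.8, 1.33]
```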

Calculating Mean Squared Error Without Using any Modules

```python
true_value_of_y = [3, 2, 6, 1, 5]
predicted_value_of_y = [2.0, 2.4, 2.8, 3.2, 3.6]

summation_of_value = 0
n = len(true_value_of_y)

for i in range(n):
    difference_of_value = true_value_of_y[i] - predicted_value_of_y[i]
    squared_difference = difference_of_value ** 2
    # Running total of the squared differences
    summation_of_value = summation_of_value + squared_difference

# Divide the total by the number of values to get the mean
MSE = summation_of_value / n
print("The Mean Squared Error is:", MSE)
```

The true values and the predicted values are stored in two different variables. The variable summation_of_value is initialized to zero and accumulates the squared differences. The len() function gives the number of values in true_value_of_y. The for loop iterates over every index, calculates the difference between the true value and the predicted value, squares it, and adds it to the running total. Dividing the total by n gives the MSE.

Output

The Mean Squared Error is: 3.6400000000000006
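The same loop can be written more compactly with zip() and a generator expression. This is an alternative sketch, not the article's original code; the function name `mean_squared_error_plain` and the input checks are hypothetical additions.

```python
def mean_squared_error_plain(y_true, y_pred):
    """MSE using only built-in functions (illustrative helper)."""
    if len(y_true) != len(y_pred):
        raise ValueError("both lists must have the same length")
    if not y_true:
        raise ValueError("at least one value is required")
    # zip() pairs each true value with its prediction
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


mse = mean_squared_error_plain([3, 2, 6, 1, 5], [2.0, 2.4, 2.8, 3.2, 3.6])
print(round(mse, 2))  # 3.64
```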

Calculate Mean Squared Error Using Negative Values

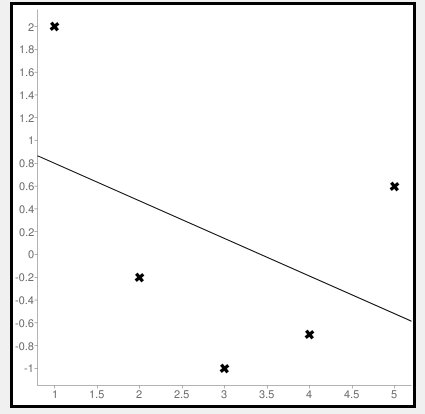

Now let us consider some negative values to calculate MSE. The values are (1,2), (3,-1), (5,0.6), (4,-0.7), (2,-0.2). The regression line equation is y=1.13-0.33x

The line regression graph for this value is:

New y values for this will be:

| x value | Calculation of predicted y | Predicted y value |
|---|---|---|
| 1 | 1.13-0.33(1) | 0.80 |
| 3 | 1.13-0.33(3) | 0.14 |
| 5 | 1.13-0.33(5) | -0.52 |
| 4 | 1.13-0.33(4) | -0.19 |
| 2 | 1.13-0.33(2) | 0.47 |

Code

```python
from sklearn.metrics import mean_squared_error

y_true = [2, -1, 0.6, -0.7, -0.2]
y_pred = [0.80, 0.14, -0.52, -0.19, 0.47]

print(round(mean_squared_error(y_true, y_pred), 4))
```

First, mean_squared_error is imported from sklearn.metrics. The actual values, some of which are negative, and the predicted values from the table are then stored in two variables. Finally, mean_squared_error() calculates the MSE. Because the errors are squared, negative values need no special handling.

Output

0.9406

Bonus: Gradient Descent

Gradient descent is used to find a local minimum of a function. The function needs to be differentiable. The basic idea is to move repeatedly in the direction opposite to the derivative (gradient) at the current point. Here it is used to find the slope m and the intercept b of the line that minimizes the MSE.
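For a line y = mx + b, the quantity being minimized is the MSE over the N data points, and each update step needs its partial derivatives with respect to b and m. The symbols E (for the error) and α (for the learning rate) are notation chosen here for illustration; the expressions themselves are the standard derivatives of the MSE for a straight line.

$$E(m, b) = \frac{1}{N}\sum_{i=1}^{N}\left(y_i - (m x_i + b)\right)^2$$

$$\frac{\partial E}{\partial b} = -\frac{2}{N}\sum_{i=1}^{N}\left(y_i - (m x_i + b)\right), \qquad \frac{\partial E}{\partial m} = -\frac{2}{N}\sum_{i=1}^{N} x_i\left(y_i - (m x_i + b)\right)$$

$$b \leftarrow b - \alpha\,\frac{\partial E}{\partial b}, \qquad m \leftarrow m - \alpha\,\frac{\partial E}{\partial m}$$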

The following code works on a set of values (data.csv) that is available in the GitHub repository.

Code:

```python
#!/usr/bin/python
# -*- coding: utf-8 -*-

from numpy import array, genfromtxt


def compute_error(b, m, points):
    # Mean squared error of the line y = m * x + b over all points
    totalError = 0
    for i in range(0, len(points)):
        x = points[i, 0]
        y = points[i, 1]
        totalError += (y - (m * x + b)) ** 2
    return totalError / float(len(points))


def gradient_step(b_current, m_current, points, learningRate):
    # One gradient descent update of b and m
    b_gradient = 0
    m_gradient = 0
    N = float(len(points))
    for i in range(0, len(points)):
        x = points[i, 0]
        y = points[i, 1]
        # Partial derivatives of the MSE with respect to b and m
        b_gradient += -(2 / N) * (y - (m_current * x + b_current))
        m_gradient += -(2 / N) * x * (y - (m_current * x + b_current))
    new_b = b_current - learningRate * b_gradient
    new_m = m_current - learningRate * m_gradient
    return [new_b, new_m]


def gradient_descent_runner(points, starting_b, starting_m, learning_rate, iterations):
    b = starting_b
    m = starting_m
    for i in range(iterations):
        (b, m) = gradient_step(b, m, array(points), learning_rate)
    return [b, m]


def main():
    # data.csv holds two comma-separated columns: x and y
    points = genfromtxt('data.csv', delimiter=',')
    learning_rate = 0.00001
    initial_b = 0
    initial_m = 0
    iterations = 10000
    print('Starting gradient descent at b = {0}, m = {1}, error = {2}'.format(
        initial_b, initial_m, compute_error(initial_b, initial_m, points)))
    print('Running...')
    [b, m] = gradient_descent_runner(points, initial_b, initial_m,
                                     learning_rate, iterations)
    print('After {0} iterations b = {1}, m = {2}, error = {3}'.format(
        iterations, b, m, compute_error(b, m, points)))


if __name__ == '__main__':
    main()
```

Output:

Starting gradient descent at b = 0, m = 0, error = 5671.844671124282

Running...

After 10000 iterations b = 0.11558415090685024, m = 1.3769012288001614, error = 212.26220312358794

Frequently Asked Questions Related to Mean Squared Error in Python

To install NumPy, run:

pip install numpy

To install scikit-learn (the library imported as sklearn), run:

pip install scikit-learn

MSE stands for Mean Squared Error.

Conclusion

In this article, we have learned about the mean squared error and how to calculate it with NumPy, with scikit-learn, and without any module. It is simple to calculate, and it is widely used as the loss function for least squares regression. The formula for the MSE is easy to memorize. We hope this article is handy and easy to understand.